/ home / newsletters /

Bitcoin Optech Newsletter #340

This week’s newsletter announces a fixed vulnerability affecting LDK,

summarizes discussion about zero-knowledge gossip for LN channel

announcements, describes the discovery of previous research that can be

applied to finding optimal cluster linearizations, provides an update on

the development of the Erlay protocol for reducing transaction relay

bandwidth, looks at tradeoffs between different scripts for implementing

LN ephemeral anchors, relays a proposal for emulating an OP_RAND

opcode in a privacy-preserving manner with no consensus changes

required, and points to renewed discussion about lowering the minimum

transaction feerate.

News

-

● Channel force closure vulnerability in LDK: Matt Morehouse posted to Delving Bitcoin to announce a vulnerability affecting LDK that he responsibly disclosed and which was fixed in LDK version 0.1.1. Similar to Morehouse’s other recently disclosed vulnerability in LDK (see Newsletter #339), a loop in LDK’s code terminated the first time it handled an unusual occurrence, preventing it from handling additional occurrences of the same problem. In this case, it could result in LDK failing to settle pending HTLCs in open channels, ultimately leading honest counterparties to force close the channels so that they could settle the HTLCs onchain.

This likely couldn’t lead to direct theft, but it could result in the victim paying fees for the closed channels, paying fees to open new channels, and reduce the victim’s ability to earn forwarding fees.

Morehouse’s excellent post goes into additional detail and suggests how future bugs from the same underlying cause might be avoided.

-

● Zero-knowledge gossip for LN channel announcements: Johan Halseth posted to Delving Bitcoin with an extension to the proposed 1.75 channel announcement protocol that would allow other nodes to verify that a channel was backed by a funding transaction, preventing multiple cheap DoS attacks, but without revealing which UTXO is the funding transaction—enhancing privacy. Halseth’s extension builds on his previous research (see Newsletter #321) that uses utreexo and a zero-knowledge (ZK) proof system. It would be applied to MuSig2-based simple taproot channels.

The discussion focused on the tradeoffs between Halseth’s idea, continuing to use non-private gossip, and alternative methods for generating the ZK proof. Concerns included ensuring that all LN nodes can quickly verify proofs and the complexity of the proof and verification system since all LN nodes will need to implement it.

Discussion was ongoing at the time this summary was written.

-

● Discovery of previous research for finding optimal cluster linearization: Stefan Richter posted to Delving Bitcoin about a research paper from 1989 he found that has a proven algorithm that can be used to efficiently find the highest-feerate subset of a group of transactions that will be topologically valid if the subset is included in a block. He also found a C++ implementation of several algorithms for similar problems that “are supposed to be even faster in practice”.

Previous work on cluster mempool focused on making it easy and fast to compare different linearizations so that the best one could be used. This would allow using a fast algorithm to immediately linearize a cluster, with a slower but more optimal algorithm able to run on spare CPU cycles. However, if the 1989 algorithm for the maximum-ratio closure problem, or another algorithm for that problem, can run fast enough, it could be used all of the time instead. But, even if it was moderately slow, it could still be used as the algorithm that runs on spare CPU cycles.

Pieter Wuille replied with excitement and multiple follow-up questions. He also described a new cluster linearization algorithm that the cluster mempool working group has been developing based on a discussion from Bitcoin Research Week with Dongning Guo and Aviv Zohar. It converts the problem to one that can be addressed using linear programming and appears to be fast, easy to implement, and produces an optimal linearization—if it terminates. However, proof is needed that it will terminate (and in a reasonable amount of time).

Although not directly related to Bitcoin, we found interesting Richter’s description of how he found the 1989 paper using the DeepSeek reasoning LLM.

At the time of writing, discussion was ongoing and additional papers about the problem domain were being explored. Richter wrote, “It appears that our problem, or rather, its generalized solution which is called source-sink-monotone parametric min-cut has applications in something called polygon aggregation for map simplification and other topics in computer vision.”

-

● Erlay update: Sergi Delgado made several posts to Delving Bitcoin about his work over the past year implementing Erlay for Bitcoin Core. He starts with an overview of how existing transaction relay (called fanout) works and how Erlay proposes changing that. He notes that some fanout is expected to remain even in a network where every node supports Erlay, as “fanout is more efficient and considerably faster than set reconciliation, provided the receiving node does not know about the transaction being announced.”

Using a mix of fanout and reconciliation requires choosing when to use each method and which peers to use them with, so his research has focused on making the optimal choices:

-

● Filtering based on transaction knowledge examines whether a node should include a peer in its plans to fanout a transaction even if it knows that peer already has the transaction. For example, our node has ten peers; three of those peers have already announced a transaction to us. If we want to randomly choose three peers to further fanout that transaction, should we select from all ten peers or just the seven peers that haven’t announced the transaction to us? Surprisingly, simulation results show that “there is no significant difference between [the options]”. Delgado explores this surprising result and concludes that all peers should be considered (i.e., no filtering should be done).

-

● When to select fanout candidate peers examines when a node should choose which peers will receive a fanout transaction (with the remainder using Erlay reconciliation). Two options are considered: shortly after a node validates a new transaction and queues it for relay, and when it’s time for that transaction to be relayed (nodes don’t relay transactions immediately: they wait a small random amount of time to make it harder to probe the network topology and guess which node originated a transaction, which would be bad for privacy). Again, simulation results show that “there are no meaningful differences”, although the “results may vary […] in networks with partial [Erlay] support”.

-

● How many peers should receive a fanout examines the fanout rate. A higher rate accelerates transaction propagation but reduces bandwidth savings. Besides testing the fanout rate, Delgado also tested increasing the number of outbound peers, as that’s one of the goals of adopting Erlay. The simulation shows the current Erlay approach reduced bandwidth by approximately 35% using current outbound peer limits (8 peers), and 45% using 12 outbound peers. However, the transaction latency is increased by about 240%. Many other tradeoffs are graphed in the post. In addition to the results being helpful for choosing current parameters, Delgado notes that they’ll be useful for evaluating alternative fanout algorithms that might be able to make better tradeoffs.

-

● Defining the fanout rate based on how a transaction was received examines whether the fanout rate should be adjusted depending on whether a transaction was first received via fanout or reconciliation. Further, if it should be adjusted, what adjusted rate should be used? The idea is that fanout is faster and more efficient as a new transaction begins being relayed through the network, but it leads to wasted bandwidth after a transaction has already reached most nodes. There’s no way for a node to directly determine how many other nodes have already seen a transaction, but if the peer that first sent it a transaction used fanout rather than waiting for the next scheduled reconciliation, then it’s more likely that the transaction is in the early stage of propagation. This data can be used to moderately increase the node’s own fanout rate for that transaction to help it propagate faster. Delgado simulated the idea and found a modified fanout ratio that decreases propagation time by 18% with only a 6.5% increase in bandwidth over the control result that uses the same fanout rate for all transactions.

-

-

● Tradeoffs in LN ephemeral anchor scripts: Bastien Teinturier posted to Delving Bitcoin to ask for opinions about what ephemeral anchor script should be used as one of the outputs to TRUC-based LN commitment transactions as a replacement for existing anchor outputs. The script used determines who can CPFP fee bump the commitment transaction (and under what conditions they can bump). He presented four options:

-

● Use a pay-to-anchor (P2A) script: this has a minimal onchain size but means that all trimmed HTLC value will probably go to miners (like it presently does).

-

● Use a single-participant keyed anchor: this may allow some excess trimmed HTLC value to be claimed by a participant who voluntarily accepts waiting a few dozen blocks before being able to spend the money they close out of a channel. Anyone who wants to force close a channel must wait that time anyway. However, neither party to the channel can delegate paying the fee to a third-party without allowing that party to steal all of their channel funds. If both you and your counterparty compete to claim the excess value, it will likely all go to miners anyway.

-

● Use a dual-keyed anchor: this allows each participant to claim excess trimmed HTLC value without having to wait for additional blocks before being able to spend. However, it does not allow delegation. The two parties to a channel can still compete with each other.

In replies to the post, Gregory Sanders noted that the different schemes could be used at different times. For example, P2A could be used when there were no trimmed HTLCs, and one of the keyed anchors could be used other times. If the trimmed value was above the dust threshold, that value could be added to the LN commitment transaction instead of an anchor output. Additionally, he warned that it could create “new weirdness [a] counterparty may be tempted to ramp up the trimmed amount and take it themselves.” David Harding extended on this theme in a later post.

Antoine Riard warned against using P2A due to the risk of encouraging miner transaction pinning (see Newsletter #339).

-

-

● Emulating OP_RAND: Oleksandr Kurbatov posted to Delving Bitcoin about an interactive protocol that allows two parties to make a contract that will pay out in a way that neither can predict, which is functionally equivalent to randomly. Previous work on probabilistic payments in Bitcoin has used advanced scripts, but Kurbatov’s approach uses specially constructed public keys that allows the winner to spend the contracted funds. This is more private and may allow greater flexibility.

Optech wasn’t able to fully analyze the protocol, but we didn’t spot any obvious problems. We hope to see additional discussion of the idea—probabilistic payments have multiple applications, including allowing users to send amounts onchain that would otherwise be uneconomical, such as for trimmed HTLCs.

-

● Discussion about lowering the minimum transaction relay feerate: Greg Tonoski posted to the Bitcoin-Dev mailing list about lowering the default minimum transaction relay feerate, a topic that has been discussed repeatedly (and summarized by Optech) since 2018—most recently in 2022 (see Newsletter #212). Of note, a recently disclosed vulnerability (see Newsletter #324) did reveal a potential problem that could have affected users and miners who lowered the setting in the past. Optech will provide updates if there is significant further discussion.

Changing consensus

A monthly section summarizing proposals and discussion about changing Bitcoin’s consensus rules.

-

● Updates to cleanup soft fork proposal: Antoine Poinsot made several posts to the Delving Bitcoin thread about the consensus cleanup soft fork suggesting parameter changes:

-

● Introduce legacy input sigops limit: in a private thread, Poinsot and several other contributors have attempted to produce a block for regtest that will take the longest possible time to validate using known problems in the validation of legacy (pre-segwit) transactions. After research, he found that he could “adapt [the worst block] to be valid under the mitigations originally proposed in 2019” (see Newsletter #36). This leads him to propose a different mitigation: limiting the maximum number of signature operations (sigops) in legacy transactions to 2,500. Each execution of

OP_CHECKSIGcounts as one sigop and each execution ofOP_CHECKMULTISIGcan count as up to 20 sigops (depending on the number of public keys used with it). His analysis shows that this will decrease the worst-case validation time by 97.5%.As with any change of this type, there is a risk of accidental confiscation due to the new rule making previously signed transactions invalid. If you know of someone who requires legacy transactions with more than 2,500 single-sig operations or more than 2,125 keys for multisig operations1, please alert Poinsot or other protocol developers.

-

● Increase timewarp grace period to 2 hours: previously, the cleanup proposal disallowed the first block in a new difficulty period from having a block header time more than 600 seconds before the time of the previous block. This meant that a constant amount of hashrate could not use the timewarp vulnerability to produce blocks faster than once every 10 minutes.

Poinsot now accepts using a 7,200 second (2 hour) grace period, as originally proposed by Sjors Provoost as being much less likely to lead to a miner accidentally producing an invalid block, although it does allow a very patient attacker controlling more than 50% of network hashrate to use the timewarp attack to lower difficulty over a period of months even if actual hashrate remains constant or increases. This would be a publicly visible attack and the network would have months to react. In his post, Poinsot summarizes previous arguments (see Newsletter #335 for our much less detailed summary) and concludes that, “despite the fairly weak arguments in favor of increasing the grace period, the cost of doing so [does] not prohibit erring on the safe side.”

On a thread dedicated to discussing extending the grace period, developers Zawy and Pieter Wuille discussed how the 600 second grace period, which would seem to allow slowly decreasing difficulty to its minimum value, was actually sufficient to prevent more than one small difficulty decrease. Specifically, they looked at the impact of Bitcoin’s off-by-one difficulty adjustment bug and the asymmetry of the erlang distribution on accurately retargetting difficulty. Zawy succinctly concluded, “It’s not that an adjustment for both Erlang and ‘2015 hole’ aren’t needed, it’s that 600 seconds before the previous block isn’t a 600 second lie, it’s a 1200 second lie because we expected a timestamp 600 seconds after it.”

-

● Duplicate transaction fix: following a request for feedback from miners (see Newsletter #332) about potential negative impacts of consensus solutions to the duplicate transactions problem, Poinsot selected a specific solution to include in the cleanup proposal: requiring the height of the previous block be included in each coinbase transaction’s time lock field. This proposal has two advantages: it allows extracting the committed block height from a block without having to parse Script and it allows creating a compact SHA256-based proof of block height (about 700 bytes in the worst case, much less than the worst-case 1 MB proof that would be required now without an advanced proof system).

This change will not affect regular users but it will eventually require miners to update the software they use to generate coinbase transactions. If any miners have concerns about this proposal, please contact Poinsot or another protocol developer.

Poinsot also posted a high-level update on his work and the proposal’s current state to the Bitcoin-Dev mailing list.

-

-

● Request for a covenant design supporting Braidpool: Bob McElrath posted to Delving Bitcoin requesting developers working on covenant designs to consider how their favorite proposal, or a new proposal, could assist in the creation of an efficient decentralized mining pool. The current prototype design for Braidpool uses a federation of signers, where signers receive threshold signing shares based on their contribution of hashrate to the pool. This allows a majority miner (or a collusion of multiple miners constituting a majority) to steal payouts from smaller miners. McElrath would prefer to use a covenant that ensures each miner is able to withdraw funds from the pool in proportion to their contributions. He provides a specific list of requirements in the post; he also welcomes a proof of impossibility.

-

● Deterministic transaction selection from a committed mempool: a thread from April 2024 received renewed attention this past month. Previously, Bob McElrath posted about having miners commit to the transactions in their mempool and then only allowing them to include transactions in their blocks that were deterministically selected from previous commitments. He sees two applications:

-

Globally for all miners: this would eliminate the “risk and liability of transaction selection” in a world where miners are often large corporate entities that need to comply with laws, regulations, and the advice of risk managers.

-

Locally for a single pool: this has most of the advantage of a global deterministic algorithm but doesn’t require any consensus changes to implement. Additionally, it can save a large amount of bandwidth between peers in a decentralized mining pool such as Braidpool, as the algorithm determines which transactions a candidate block must include, so any share produced from that block doesn’t need to explicitly provide any transaction data to pool peers.

Anthony Towns described several potential problems with a global consensus change option: any change to transaction selection would require a consensus change (possibly a hard fork) and anyone who created a non-standard transaction would be unable to get it mined even with the cooperation of a miner. Recent policy changes in the past few years that would’ve required a consensus change include: TRUC, updated RBF policies, and ephemeral anchors. Towns linked to a well-known case where millions of dollars in value were accidentally stuck into a non-standard script, which a cooperative miner was able to unstick.

The rest of the discussion focused on the local approach as conceived for Braidpool. There were no objections and additional discussion on a topic about a difficulty adjustment algorithm (see next item in this section) showed how it could be especially useful for a pool that creates blocks at a much higher rate than Bitcoin, where transaction selection determinism significantly reduces bandwidth, latency, and validation costs.

-

-

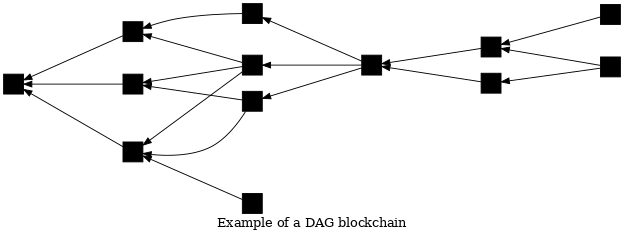

● Fast difficulty adjustment algorithm for a DAG blockchain: developer Zawy posted to Delving Bitcoin about a mining difficulty adjustment algorithm (DAA) for a directed acyclic graph (DAG) type blockchain. The algorithm was designed for use in Braidpool’s peer consensus (not global Bitcoin consensus), however discussion did repeatedly touch on aspects of global consensus.

In Bitcoin’s blockchain, each block commits to exactly one parent; multiple child blocks may commit to the same parent, but only one of them will be considered valid on the best blockchain by a node. In a DAG blockchain, each block may commit to one or more parents and may have zero or more children that commit to it; the DAG best blockchain may consider multiple blocks in the same generation to be valid.

The proposed DAA targets the average number of parents in the last-seen 100 valid blocks. If the average number of parents increases, the algorithm increases difficulty; if there are fewer parents, the difficulty decreases. Targeting an average of two parents gives the fastest consensus, according to Zawy. Unlike Bitcoin’s DAA, the proposed DAA doesn’t need any awareness of time; however, it does require peers to ignore blocks that arrive much later than other blocks in the same generation. It’s not possible to achieve consensus on lateness, so ultimately DAGs with more proof-of-work (PoW) are preferred over those with less PoW; the developer of the DAA, Bob McElrath, analyzed the problem and a possible mitigation.

Pieter Wuille commented that the proposal appears similar to a 2012 idea by Andrew Miller; Zawy agreed and McElrath will update his paper with a citation. Sjors Provoost discussed the complexity of handling DAG chains in Bitcoin Core’s current architecture, but noted that it might be easier using libbitcoin and efficient using utreexo. Zawy extensively simulated the protocol and indicated that he was working on additional simulations for variants of the protocol to find the best mix of tradeoffs.

The last post to the discussion thread was made about a month before this summary was written, but we expect Zawy and Braidpool developers are continuing to analyze and implement the protocol.

Releases and release candidates

New releases and release candidates for popular Bitcoin infrastructure projects. Please consider upgrading to new releases or helping to test release candidates.

-

● BDK Wallet 1.1.0 is a release of this library for building Bitcoin-enabled applications. It uses transaction version 2 by default (improving privacy by allowing BDK transactions to blend in with other wallets that must use v2 transactions due to their support for relative locktimes, see Newsletter #337). It also adds support for compact block filters (see Newsletter #339), in addition to “various bug fixes and improvements”.

-

● LND v0.18.5-beta.rc1 is a release candidate for a minor version of this popular LN node implementation.

Notable code and documentation changes

Notable recent changes in Bitcoin Core, Core Lightning, Eclair, LDK, LND, libsecp256k1, Hardware Wallet Interface (HWI), Rust Bitcoin, BTCPay Server, BDK, Bitcoin Improvement Proposals (BIPs), Lightning BOLTs, Lightning BLIPs, Bitcoin Inquisition, and BINANAs.

-

● Bitcoin Core #21590 implements a safegcd-based modular inversion algorithm for MuHash3072 (see Newsletters #131 and #136), based on libsecp256k1’s implementation while adding support for both 32-bit and 64-bit architectures and specializing for the specific modulus. Benchmark results show an approximate 100× performance improvement on x86_64, reducing MuHash’s computation from 5.8 ms to 57 μs, paving the way for more efficient state validation.

-

● Eclair #2983 modifies routing table synchronization on reconnection to only synchronize channel announcements with the node’s top peers (determined by shared channel capacity) to reduce network overhead. In addition, the default behavior of the synchronization whitelist (see Newsletter #62) has been updated: to disable synchronization with non-whitelisted peers, users must now set

router.sync.peer-limitto 0 (the default value is 5). -

● Eclair #2968 adds support for splicing on public channels. Once the splice transaction is confirmed and locked on both sides, nodes exchange announcement signatures and then broadcast a

channel_announcementmessage to the network. Recently, Eclair added tracking of third-party splices as a prerequisite for this (see Newsletter #337). This PR also disallows the use ofshort_channel_idfor routing on private channels, instead prioritizingscid_aliasto ensure that the channel UTXO isn’t revealed. -

● LDK #3556 improves HTLC handling by proactively failing HTLCs backwards if they are too close to expiration before waiting for an upstream on-chain claim to confirm. Previously, a node would delay failing the HTLC backwards by an additional three blocks to give the claim time to confirm. However, this delay ran the risk of forcibly closing its channel. In addition, the

historical_inbound_htlc_fulfillsfield is removed to clean up the channel state, and a newSentHTLCIdis introduced to eliminate confusion from duplicate HTLC IDs on inbound channels. -

● LND #9456 adds deprecation warnings to the

SendToRoute,SendToRouteSync,SendPayment, andSendPaymentSyncendpoints in preparation for their removal in the release after next (0.21). Users are encouraged to migrate to the new v2 methodsSendToRouteV2,SendPaymentV2,TrackPaymentV2.

Footnotes

-

In P2SH and the proposed input sigop counting, an

OP_CHECKMULTISIGwith more than 16 public keys is counted as 20 sigops, so someone usingOP_CHECKMULTISIG125 times with 17 keys each time will be counted as 2,500 sigops. ↩